Difference between revisions of "CLOCKSS: Extracting Triggered Content"

(→CLOCKSS: Extracting Triggered Content: Clarification resulting from discussion of draft certification report) |

m (Previous change and this one approved by Tom Lipkis) |


Revision as of 01:43, 25 June 2014

CLOCKSS: Extracting Triggered Content

The CLOCKSS board may decide to trigger content from the CLOCKSS archive when it is no longer available from any publisher. Reasons for doing so include:

- A publisher discontinuing an entire title.

- A publisher who has acquired a title deciding not to re-host a run of back issues.

- Disaster.

The CLOCKSS Participating Publisher Agreement paragraph 4.E states:

Released Content use terms and restrictions will be determined by an accompanying Creative Commons license (or equivalent license) chosen either by Publisher or, if Publisher fails to respond within thirty (30) days following receipt by it of the notice described above, by the CLOCKSS Board.

At present, EDINA and Stanford have volunteered to re-publish triggered content, but it is important to note that the Creative Commons license means that anyone can re-publish such content, and that re-publishing is not a core function of the CLOCKSS archive.

Once the CLOCKSS board notifies the LOCKSS Executive Director that a trigger event has occurred, the CLOCKSS Metadata Lead and assigned LOCKSS staff run a process that delivers the triggered content to the re-publishing sites, and updates a section of the CLOCKSS website with links pointing to the re-published content at both sites. In OAIS terminology, the package delivered to the re-publishing sites is a Distribution Information Package (DIP).

Additionally, a publisher with content in CLOCKSS can request to be supplied with a copy. In such a case, the process described below is followed, but the content is delivered to the requesting publisher rather than to a re-publishing site.

The CLOCKSS: Publisher Agreement specifies that content must be unavailable for 6 months before it is triggered. It is important to observe that this delay is mandatory only when content is being triggered without the consent of the publisher. In all cases so far content has been triggered at the request of the publisher, and the technical process described below has taken 2-4 weeks.

Overview of the Trigger Process

The trigger process involves:

- identifying the triggered content in the CLOCKSS preservation network,

- extracting the triggered content from the CLOCKSS preservation network,

- preparing the content for publication on the triggered content machines,

- re-hosting triggered content on the CLOCKSS triggered content site,

- re-registering triggered article DOIs.

The following sections describe the process as it is currently implemented. It is possible that content triggered in the future might contain files whose format has become obsolete. The additional processes that would be needed in this situation are described in LOCKSS: Format Migration.

The CLOCKSS Metadata Lead is responsible for this process.

Identifying the Triggered Content

The triggering process begins by identifying the triggered content within the CLOCKSS preservation network. First, the Archival Units (AUs) that contain the title are identified by querying the database of bibliographic metadata that is compiled and maintained by each CLOCKSS box. This database contains a record of articles published for each preserved title, indexed by year, volume, and issue.

A query to this database on a production CLOCKSS box yields a list of AU identifiers (AUIDs) that identify the associated AUs (AIPs in OAIS terminology) being preserved in the CLOCKSS network. A similar query to an ingest machine identifies any triggered AUs that have yet to emerge from the ingest pipeline (see CLOCKSS: Ingest Pipeline). The AUIDs are the same on every CLOCKSS box and, as described in Definition of AIP, uniquely identify the location on each box of that AU (AIP). For content that was harvested from a publisher's website, a single AU will normally contain all the files for a given volume or year. For content obtained via file transfer from publishers, content is organized into AUs that contain titles, volumes, and issues from the same publisher that were ingested in a given calendar year.

Extracting the Triggered Content

Once the AU has been identified, the triggered content must be extracted from the CLOCKSS preservation network. If the content has already been released to the production CLOCKSS boxes, it is extracted from the Stanford production CLOCKSS box (clockss-stanford.clockss.org) because that box is the one most readily available to the technical staff. Content that is still in the ingest pipeline is flushed through the CLOCKSS: Ingest Pipeline to the production boxes and triggered from there.

For harvested content the extraction process involves locating the directories in the CLOCKSS box repository that correspond to each AU of the triggered content. Each of the hierarchies under these directories is included in the DIP. If all content is on the Stanford CLOCKSS box, it can be exported directly into zip or tar files using the LOCKSS daemon's export functionality.

For file transfer content, the extraction process involves locating the directories in the CLOCKSS box that correspond to the AUs that contain the triggered content. Within these directories are either sub-directories or archive files that contain the content. These directories or archive files are copied from the repository to a server where the triggered content will be prepared for publication.

In both cases, a check is performed in case some of the extracted content includes material that is recorded as having been retracted or withdrawn, as described in CLOCKSS: Ingest Pipeline. If so, that content is excluded before the trigger process continues.

Preparing File Transfer Content for Dissemination

File transfer content is typically a collection of files that include PDFs of articles, XML formatted full-text files, supporting files such as images and multi-media, and metadata files that contain bibliographic information. These files are input to the processes of the publishing platform that displays the publisher's content to readers. The exact content of the collection varies between publishers. Typically, further processing involving the following steps is required to extract the content from the directories or archive files that were copied from the CLOCKSS box repository, and generate from them a web site:

- Running the script that verifies the MD5 checksums stored with the content.

- Unpacking any archive files that contain the content into their own directories and isolating the files that correspond to the triggered content. Publishers tend to group files for individual issues into their own directories, so isolating the files involves retaining only those directories that correspond to the content being triggered and discarding directories for other content.

- Adding any full-text PDF files unmodified to the web site.

- Adding other files, such as images and multi-media, to the web site.

- Rendering any XML files into readable full-text HTML pages.

- Generating HTML abstract pages linking to the full-text PDF and HTML pages.

- Inserting article-level bibliographic metadata into the <meta> tags of the abstract pages.

- Creating article-level metadata files in standard forms such as RIS and BibTeX, and linking to them from the abstract pages.

- Generating an HTML issue table of contents page with links to the article abstract pages.

- Generating a volume table of contents page with links to the issue table of contents pages.

- Generating a journal table of contents page with links to the volume table of contents pages.
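The checksum verification in the first step could look like the following minimal sketch. It assumes a manifest in the common `md5sum` format (hex digest, whitespace, relative path). The actual CLOCKSS script and the way checksums are stored with the content are not described here, so the manifest format and the function names are hypothetical.

```python
import hashlib
from pathlib import Path


def md5_of(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex MD5 digest of a file, reading it in chunks."""
    digest = hashlib.md5()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_manifest(manifest: Path) -> list[str]:
    """Return the relative paths that are missing or fail the checksum.

    Assumes md5sum-style lines: '<hex digest>  <relative path>'.
    Paths are resolved relative to the manifest's own directory.
    """
    failures = []
    for line in manifest.read_text(encoding="utf-8").splitlines():
        if not line.strip():
            continue
        expected, name = line.split(maxsplit=1)
        name = name.lstrip("*")  # md5sum marks binary mode with '*'
        target = manifest.parent / name
        if not target.is_file() or md5_of(target) != expected.lower():
            failures.append(name)
    return failures
```

An empty result means every listed file is present and matches its recorded digest. Any other result lists the files to investigate before preparation continues.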

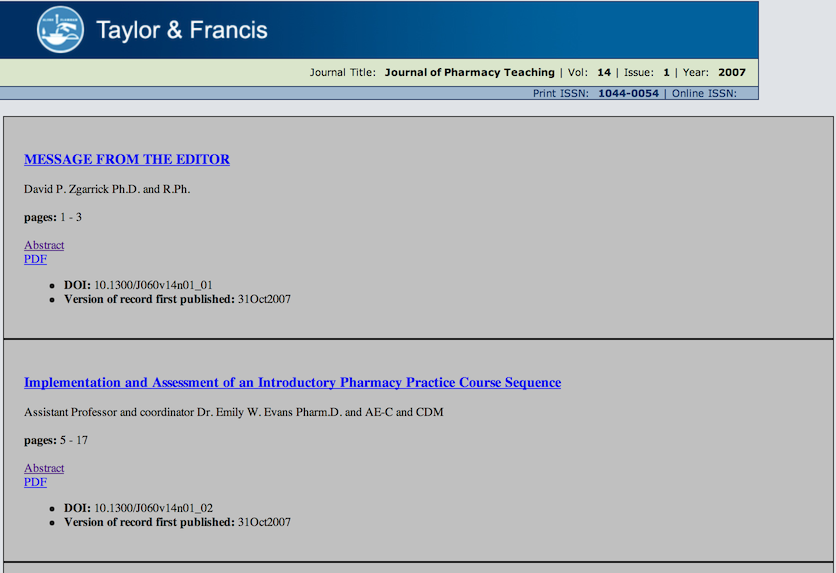

This process takes place on a temporary preparation server. Here is a sample generated issue table of contents page:

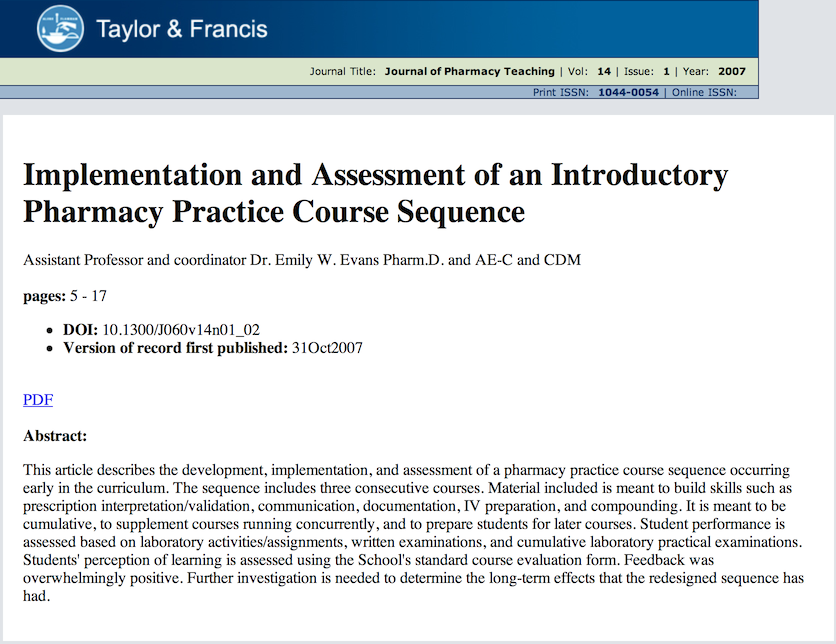

Here is a sample generated article abstract page:

Here is an example of Dublin Core article-level metadata included in the generated abstract page shown above:

<meta http-equiv="Content-Type" content="text/html; charset=utf-8">

<meta name="dc.Format" content="text/HTML">

<meta name="dc.Publisher" content="Taylor & Francis">

<meta name="dc.Title" content="Implementation and Assessment of an Introductory Pharmacy Practice Course Sequence ">

<meta name="dc.Identifier" scheme="coden" content="Journal of Pharmacy Teaching, Vol. 14, No. 1, 2007: pp. 5–17">

<meta name="dc.Identifier" scheme="doi" content="10.1300/J060v14n01_02">

<meta name="dc.Date" content="31Oct2007">

<meta name="dc.Creator" content=" Assistant Professor and coordinator Dr. Emily W. Evans Pharm.D. and AE-C and CDM">

<meta name="keywords" content=", Education, pharmacy practice, laboratory education">
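Tags like those in the sample above could be generated along the following lines. This is a sketch only: the helper name `meta_tag` and the tuple layout are hypothetical, and the real preparation scripts may work quite differently. Unlike the sample, the sketch escapes attribute values and trims stray whitespace.

```python
from html import escape
from typing import Optional


def meta_tag(name: str, content: str, scheme: Optional[str] = None) -> str:
    """Build one <meta> element, escaping attribute values for HTML."""
    attrs = [f'name="{escape(name, quote=True)}"']
    if scheme is not None:
        attrs.append(f'scheme="{escape(scheme, quote=True)}"')
    attrs.append(f'content="{escape(content.strip(), quote=True)}"')
    return "<meta " + " ".join(attrs) + ">"


# Field values taken from the sample above: (name, content, scheme).
article = [
    ("dc.Publisher", "Taylor & Francis", None),
    ("dc.Identifier", "10.1300/J060v14n01_02", "doi"),
    ("dc.Date", "31Oct2007", None),
]

for name, content, scheme in article:
    print(meta_tag(name, content, scheme))
```

Escaping matters because publisher names and titles often contain characters such as `&` or quotation marks, which would otherwise produce invalid attribute values.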

The result of this process is a directory hierarchy capable of being exported by a web server such as Apache. Initially, this was the form in which file transfer content was disseminated to the re-publishing sites, which used Apache directly. Now, the content is exported by Apache running on the preparation server, and ingested in the normal way by a LOCKSS daemon, after which the generated directory hierarchy can be deleted. This results in a set of AUs analogous to those which would have been obtained had the LOCKSS daemon ingested the content from the original publisher, albeit with a different look and feel. As time permits, early triggered content will be re-disseminated using this technique, as it provides better compatibility with link resolvers and other library systems.

Assembling the DIP

The AUs to be re-published can be collected from the selected CLOCKSS box and ingest machines (for harvested content) or from the preparation server (for file transfer content) and converted to a compressed archive using zip or tar. This forms a DIP that can be transferred to the re-publishing sites using rsync, sftp or scp.
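As an illustration, packing a set of AU directory hierarchies into a single compressed tar file might be sketched as follows. The function name and directory layout are hypothetical. The sketch uses only the Python standard library and does not use the LOCKSS daemon's own export functionality.

```python
import tarfile
from pathlib import Path


def build_dip(au_dirs: list[Path], dip_path: Path) -> list[str]:
    """Pack AU directory hierarchies into one gzip-compressed tar file.

    Returns the member names written, for logging or later checks.
    """
    with tarfile.open(dip_path, "w:gz") as archive:
        for au_dir in au_dirs:
            # Store each AU under its own directory name rather than
            # its absolute path on the source machine.
            archive.add(au_dir, arcname=au_dir.name)
    # Re-open the archive to confirm that it is readable.
    with tarfile.open(dip_path, "r:gz") as archive:
        return archive.getnames()
```

The resulting file can then be transferred with rsync, sftp or scp as described above. Re-reading the archive before transfer is a cheap check that the DIP is not truncated.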

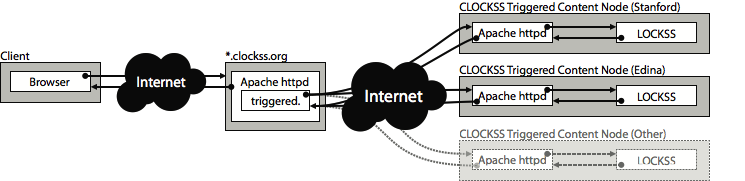

Re-publishing the Triggered Content

Currently, two sites re-publish triggered content, Stanford University and EDINA at the University of Edinburgh. Both use a combination of an Apache web server and a LOCKSS daemon to do so although, as described in Definition of AIP, the structure of AUs allows easy access by other tools to the content and metadata. Other ways to re-publish the content delivered as this type of DIP are easy to envisage, such as a shell script to convert it to an Apache web site.

The current re-publishing sites re-publish newly triggered content delivered as this type of DIP as follows. On each of the re-publishing machines:

- Configure the appropriate plugin and Title Database (TDB) entries, by updating the triggered-content configuration and plugin repositories on props.lockss.org (see LOCKSS: Property Server Operations).

- Force the LOCKSS daemon to reload its configuration and plugins from the repository.

- Unpack the AUs into the repository hierarchy of the LOCKSS daemon.

- Make a visual check of the resulting website.

The final step is to add an entry for the newly triggered title to the index of triggered titles in the “Triggered Content” section of the CLOCKSS website.

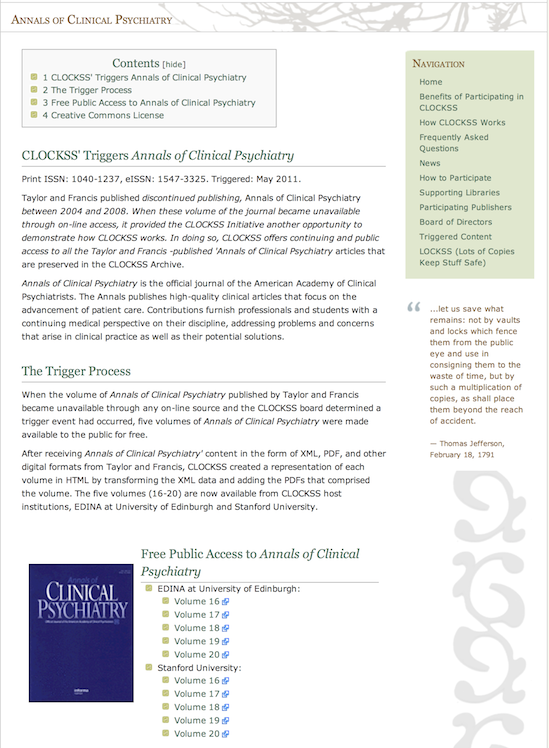

The entry points to a new landing page for the title that provides information about the title, publication history, and triggering process. It also includes links to the issues hosted at EDINA and Stanford.

Because the triggered content carries Creative Commons licenses, other institutions can also re-publish it. For example, here is Annals of Clinical Teaching at the Internet Archive.

Re-registering Triggered Article DOIs

Most publishers register individual article Digital Object Identifiers (DOIs) with a registration agency of the International DOI Foundation, such as CrossRef. Once a title is no longer available from the publisher, the registration records for the articles should be updated to refer to the content hosted at EDINA and Stanford. This is done by preparing a tab-separated file with the DOI for each article and the corresponding new URL. Separate files are required for the articles hosted at EDINA and at Stanford. The registrar uses the data in these files to update the records for the corresponding DOIs.

For content that is being served by the two re-publishing servers, this task is simple because their LOCKSS daemons provide an OpenURL resolver, allowing access to articles via their DOIs. The DOI information is available from the article metadata, via the DOI link in the daemon UI. Here is a portion of the file for a title being hosted at EDINA that can be sent to the DOI registrar.

10.1300/J060v09n02_04 http://triggered.edina.clockss.org/ServeContent?rft_id=info:doi/10.1300/J060v09n02_04

10.1300/J060v09n02_05 http://triggered.edina.clockss.org/ServeContent?rft_id=info:doi/10.1300/J060v09n02_05

10.1300/J060v09n02_07 http://triggered.edina.clockss.org/ServeContent?rft_id=info:doi/10.1300/J060v09n02_07

10.1300/J060v09n02_08 http://triggered.edina.clockss.org/ServeContent?rft_id=info:doi/10.1300/J060v09n02_08

10.1300/J060v09n01_02 http://triggered.edina.clockss.org/ServeContent?rft_id=info:doi/10.1300/J060v09n01_02

10.1300/J060v09n01_03 http://triggered.edina.clockss.org/ServeContent?rft_id=info:doi/10.1300/J060v09n01_03
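A file of this shape could be produced with a short script such as the sketch below. The URL template is inferred from the sample rows above and the function names are hypothetical. How the list of DOIs is obtained from the daemon is outside the scope of the sketch.

```python
import csv
import io

# URL template inferred from the sample rows above.
SERVE_CONTENT = "http://triggered.{site}.clockss.org/ServeContent?rft_id=info:doi/{doi}"


def doi_update_rows(dois, site):
    """Pair each DOI with its ServeContent URL at one re-publishing site."""
    return [(doi, SERVE_CONTENT.format(site=site, doi=doi)) for doi in dois]


def write_tsv(rows, stream):
    """Write (DOI, URL) pairs as tab-separated lines."""
    writer = csv.writer(stream, delimiter="\t", lineterminator="\n")
    writer.writerows(rows)


# One file per re-publishing site, as the registrar requires.
dois = ["10.1300/J060v09n02_04", "10.1300/J060v09n02_05"]
for site in ("edina", "stanford"):
    buffer = io.StringIO()
    write_tsv(doi_update_rows(dois, site), buffer)
    print(buffer.getvalue(), end="")
```

Generating both files from the same DOI list keeps the two sites' registrations consistent with each other.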

Recording the Dissemination

Generation of a DIP is performed only at the direction of the CLOCKSS board, which is recorded in their minutes.

Configuring the Re-publishing Systems

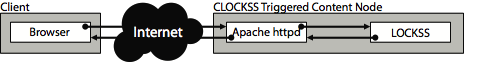

The current re-publishing sites each comprise a LOCKSS daemon and an Apache web server. Both currently reside on the same machine, but they could also be hosted on separate machines. The Apache web server is running on the standard HTTP port 80, while the LOCKSS daemon is serving content on port 8082. External access to the LOCKSS daemon is limited to administrative uses and the Apache server on the system where it is running.

The Apache server runs a proxy module that forwards requests matching a certain pattern to the LOCKSS daemon's content server. A triggered content request is first handled by the node's Apache web server, which checks it against the ProxyPassMatch pattern. If the request matches, it is passed to the LOCKSS daemon's ServeContent servlet.

If the requested content is in the AUs of the LOCKSS daemon, the page is returned to the Apache web server and then to the user. Otherwise, the daemon returns an HTTP error response, and the Apache web server returns a page that indicates the content was not preserved. This configuration serves two purposes. The first is to provide additional processing capabilities beyond those available from the LOCKSS daemon. The second is so that external access to the re-published content can be made through a standard port, an important consideration for many IT firewall configurations.
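The routing decision can be illustrated with a small sketch that applies the same regular expression as the ProxyPassMatch directive in the Apache configuration given in this document. The function `route` is hypothetical and models only the path rewriting. Query-string handling, error overrides and the proxying itself are left out.

```python
import re
from typing import Optional

# Pattern and backend taken from the ProxyPassMatch directive.
PATTERN = re.compile(r"^/((ServeContent|images).*)$")
BACKEND = "http://localhost:8082/"


def route(request_path: str) -> Optional[str]:
    """Return the backend URL for a request path, or None if Apache
    would serve the request itself instead of proxying it."""
    match = PATTERN.match(request_path)
    if match is None:
        return None
    return BACKEND + match.group(1)


for path in ("/ServeContent", "/images/logo.png", "/index.html"):
    print(path, "->", route(path))
```

Only paths beginning with `/ServeContent` or `/images` reach the daemon. Everything else, such as the landing pages, is served from Apache's own document root.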

For file transfer content that was triggered using the earlier technique and has yet to be updated, the Apache server acts as a normal web server that responds to requests for the prepared content.

Virtual machine requirements

Re-publishing hosts have modest requirements:

- Processor: Single core CPU at 2GHz or better

- Memory: 1GB

- Storage: 40GB

- Network: 10/100 Mbit/s

Setting up the CLOCKSS daemon

Re-publishing hostconfig:

The LOCKSS daemons at the re-publishing sites are part of the clockss-triggered preservation group. When running hostconfig on a new CLOCKSS Triggered Content Node, care needs to be taken to configure it as such:

Props URL: http://props.lockss.org:8001/clockss-triggered/lockss.xml

Preservation Group: clockss-triggered

Re-publishing ServeContent servlet:

The LOCKSS daemon needs to have its ServeContent servlet enabled on port 8082. This is done by logging into the LOCKSS daemon's administrative UI, clicking on "Content Access Options" then "Content Server Options" and then checking "Enable content server on port 8082".

Setting up the Apache server

The following configuration is used for the Apache server at each re-publishing site:

NameVirtualHost triggered.SITE.clockss.org:80

<VirtualHost triggered.SITE.clockss.org:80>

ServerAdmin support@support.clockss.org

DocumentRoot /var/www/html

ServerName triggered.SITE.clockss.org

<IfModule mod_proxy.c>

ProxyRequests Off

ProxyVia On

<Proxy triggered.SITE.clockss.org/*>

AddDefaultCharset off

Order deny,allow

Allow from all

</Proxy>

ProxyPassMatch ^/((ServeContent|images).*)$ http://localhost:8082/$1

ProxyErrorOverride On

ErrorDocument 404 /not-preserved.html

</IfModule>

ErrorLog logs/error_log

CustomLog logs/access_log common

</VirtualHost>

Managing Re-publishing Sites

The re-publishing site should follow the security, maintenance and upgrade guidelines for CLOCKSS boxes.

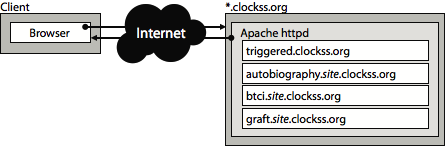

Balancing load across CLOCKSS triggered content nodes

A load-balancing Apache server is also configured to provide a single point of access to the re-publishing servers at EDINA and Stanford, under the server name triggered.clockss.org. This additional Apache instance serves two purposes. The first is to provide a single URL for services that can accept only a single URL, such as link resolvers. The second is to ensure high availability of the triggered content.

Here is the configuration file for this load balancing Apache server.

<VirtualHost *>

ServerName triggered.clockss.org

ServerAdmin support@support.clockss.org

DocumentRoot /var/www/clockss-triggered

<IfModule mod_proxy.c>

ProxyRequests off

ProxyVia On

ProxyPassMatch ^/((ServeContent|images).*)$ balancer://triggered-pool/$1

<Proxy balancer://triggered-pool>

BalancerMember http://triggered.edina.clockss.org/

BalancerMember http://triggered.stanford.clockss.org/

ProxySet lbmethod=byrequests

</Proxy>

</IfModule>

CustomLog /var/log/apache2/access.log combined

ErrorLog /var/log/apache2/error.log

ServerSignature On

</VirtualHost>

Change Process

Changes to this document require:

- Review by:

- CLOCKSS Content Lead

- CLOCKSS Network Administrator

- Approval by CLOCKSS Technical Lead