LOCKSS: Polling and Repair Protocol

Overview

LOCKSS boxes run the LOCKSS polling and repair protocol as described in our ACM Transactions on Computer Systems paper. The paper describes the polling mechanism as applying to a single file; the LOCKSS daemon applies it to an entire Archival Unit (AU) of content. Each LOCKSS daemon chooses at random the next AU upon which it will use the LOCKSS polling and repair protocol to perform integrity checks. It acts as the poller to call a poll on that AU by:

- Selecting a random sample of the other CLOCKSS boxes (the voters).

- Inviting the voters to participate in a poll on the AU, and sending each of them a freshly-generated random nonce Np.

- The poll involves the voters voting by:

  - Generating a fresh random nonce Nv.

  - Creating a vote containing, for every URL in the voter's instance of the AU:

    - The URL

    - The hash of the concatenation of Np, Nv and the content of the URL.

  - Sending the vote to the poller. Note that the vote contains a hash for each URL in the voter's instance of the AU, but that hash is not the hash of the content. The nonces ensure that the hash in the vote is different for every vote in every poll. The voter cannot simply remember the hash it initially created; it must re-hash every URL each time it votes.

- The poller tallies the votes by:

  - For each URL in the poller's instance of the AU:

    - For each voter:

      - Computing the hash of Np, Nv and the content of the URL in the poller's instance of the AU.

      - Comparing the result with the hash value for that URL in that voter's vote.

  - Note that the nonces ensure that the poller must re-hash every URL in the AU; it cannot simply remember the hash it initially created.

- In tallying the votes, the poller may detect that:

  - A URL it has does not match the consensus of the voters, or

  - A URL that the consensus of the voters says should be present in the AU is missing from the poller's AU, or

  - A URL it has does not match the checksum generated when it was stored.

- If so, it repairs the problem by:

  - requesting a new copy from one of the voters that agreed with the consensus,

  - then verifying that the new copy does agree with the consensus.
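The voting and tallying steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the daemon's implementation: the use of SHA-256, the 20-byte nonce length, and the names `url_hash`, `make_vote` and `tally` are all choices made for this example, and an AU is modelled as a plain dictionary mapping each URL to its content bytes.

```python
import hashlib
import os

NONCE_BYTES = 20  # assumption for this sketch; the text does not give a nonce length


def url_hash(n_p: bytes, n_v: bytes, content: bytes) -> bytes:
    """Hash of the concatenation of Np, Nv and the content of one URL.

    SHA-256 is an assumption made for illustration.
    """
    return hashlib.sha256(n_p + n_v + content).digest()


def make_vote(n_p: bytes, voter_au: dict) -> tuple:
    """Voter side: pick a fresh Nv and hash every URL in the voter's AU."""
    n_v = os.urandom(NONCE_BYTES)
    return n_v, {url: url_hash(n_p, n_v, body) for url, body in voter_au.items()}


def tally(n_p: bytes, poller_au: dict, votes: list) -> dict:
    """Poller side: return {url: (agreeing voters, disagreeing voters)}.

    The poller re-hashes its own copy once per voter, because each vote
    carries a different Nv. URLs known only to voters are included so that
    content missing at the poller shows up as disagreement.
    """
    urls = set(poller_au)
    for _n_v, hashes in votes:
        urls.update(hashes)
    result = {}
    for url in sorted(urls):
        agree = disagree = 0
        for n_v, hashes in votes:
            mine = url_hash(n_p, n_v, poller_au[url]) if url in poller_au else None
            if mine == hashes.get(url):
                agree += 1
            else:
                disagree += 1
        result[url] = (agree, disagree)
    return result


# Hypothetical example: four voters hold good content, the poller has one damaged URL.
n_p = os.urandom(NONCE_BYTES)
good = {
    "http://www.example.com/index.html": b"<html>index</html>",
    "http://www.example.com/branch1/001file.txt": b"original content",
}
damaged = dict(good)
damaged["http://www.example.com/branch1/001file.txt"] = b"damaged content"
votes = [make_vote(n_p, good) for _ in range(4)]
result = tally(n_p, damaged, votes)
for url, (agree, disagree) in result.items():
    print(url, agree, disagree)
```

In this sketch the damaged URL ends up with no agreeing voters, which is the signal that would lead the poller to request a repair from one of the voters in the consensus.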

In this way, at unpredictable but fairly regular intervals, every poll on an AU checks the union of the sets of URLs in that AU on the box calling the poll (the poller) and on the boxes voting (the voters). The check establishes whether each URL on the poller agrees with the consensus of the voters as to that URL's content. If it does not, it is repaired from one of the boxes in the consensus. Under our current Mellon grant we are investigating the potential benefits of an enhancement to the mechanism that results in every poll on an AU checking that every URL in that AU on each voter agrees with the same URL on the poller.

Configuration of CLOCKSS Network

As described in CLOCKSS: Box Operations, the CLOCKSS boxes are configured to form a Private LOCKSS Network (PLN), including the following configuration options:

- Because the CLOCKSS PLN is a closed network secured by SSL certificate checks at both ends of all connections, the defenses against sybil attacks, which involve the adversary creating new peer identities, are not necessary and are not implemented.

- The efficiency enhancements described below are being gradually and cautiously deployed to the CLOCKSS PLN.

Currently, on average, a poll is called on each AU instance approximately once every 100 days. Since there are currently 12 boxes in the CLOCKSS network, approximately every 8 days on average one instance of a given AU is checked.
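The arithmetic behind the 8-day figure can be made explicit. The snippet below is only a back-of-the-envelope check; it assumes that all 12 boxes hold an instance of the AU and that the polls called by different boxes are spread evenly over time. The variable names are invented for the example.

```python
# Average interval between polls called on one AU instance (from the text).
poll_interval_days = 100
# Number of boxes in the CLOCKSS network (from the text).
boxes = 12

# If each of the 12 instances is polled about once every 100 days and the
# polls are evenly spread, some instance of the AU is checked this often:
days_between_checks = poll_interval_days / boxes
print(round(days_between_checks, 1))  # 8.3
```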

Enhancements

The LOCKSS team's internal monitoring and evaluation processes identified some areas in which the efficiency of the polling process could be improved in the context of the Global LOCKSS Network (GLN). The Andrew W. Mellon Foundation funded work to implement and evaluate improvements in these areas; the grant period extends through March 2015. Although these improvements will be deployed to the CLOCKSS network, there are many fewer boxes in the CLOCKSS network than in the GLN, so the areas of inefficiency are less relevant to the CLOCKSS network. Thus the improvements are not expected to make a substantial difference to the performance of the CLOCKSS network.

The Mellon-funded work included development of improved instrumentation and analysis software, which polls the administrative Web UI of each LOCKSS box in a network to collect vast amounts of data about the operations of each box. These tools were used on the CLOCKSS network for an initial 59-day period, collecting over 18M data items. The data collected has yet to be fully analyzed but initial analysis shows that the polling process among CLOCKSS boxes continues to operate satisfactorily. Some examples of the graphs generated follow.

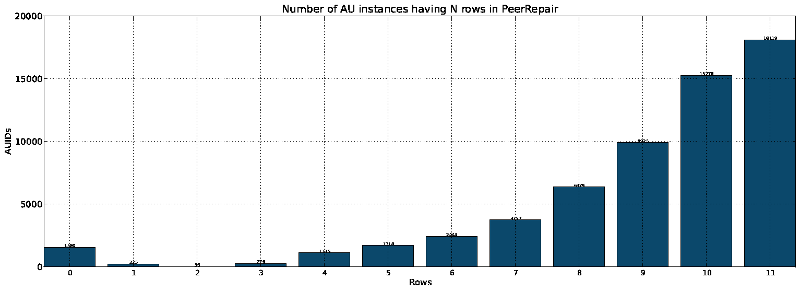

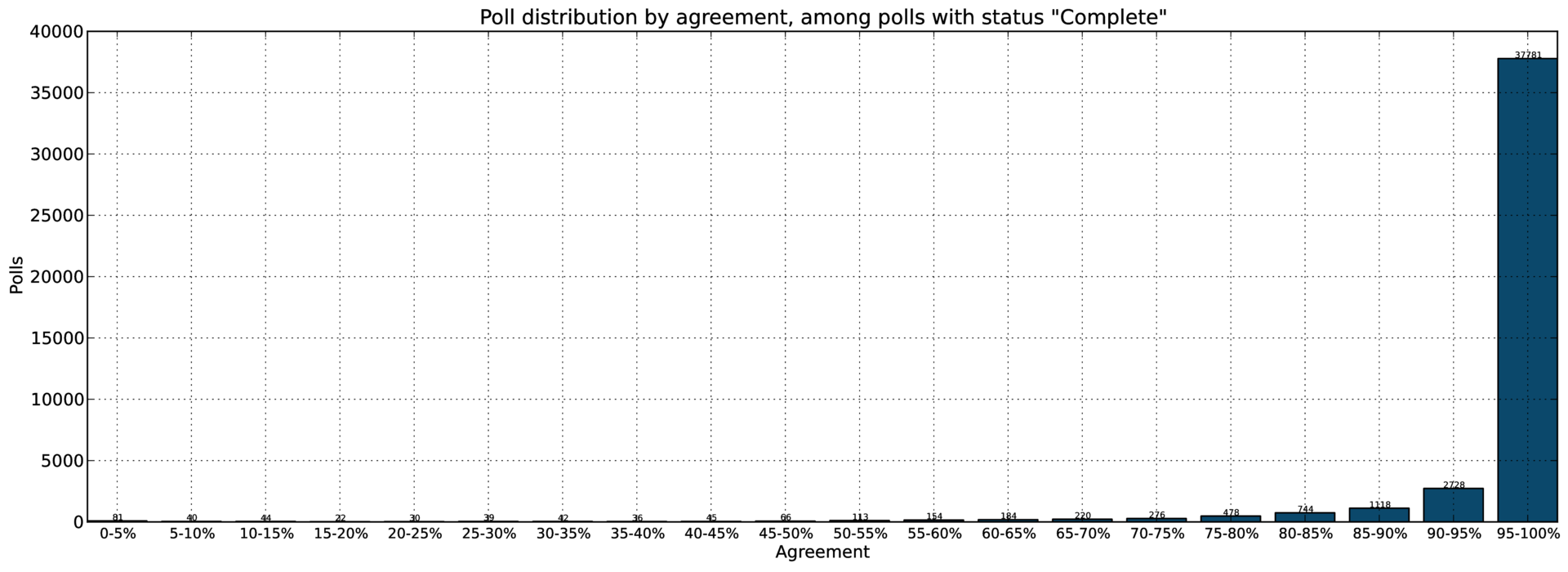

The first graph shows the number of AU instances in CLOCKSS boxes which have reached agreement with N other CLOCKSS boxes, showing the progress AUs make after ingest as the LOCKSS: Polling and Repair Protocol identifies matching AU instances at other boxes. Few AU instances in the sample have reached agreement with only a few boxes; the majority of AU instances have reached agreement with AU instances at the majority of other CLOCKSS boxes.

The second graph shows the extent of agreement among the over 40,000 successfully completed polls in the sample. As can be seen, the overwhelming majority of the polls showed complete agreement. Polls with less than complete agreement are likely to have been caused by polling among AU instances that were still collecting content, and so had different subsets of the URLs in an AU.

Demonstration

The CRL auditors requested a demonstration of the polling and repair process. Demonstrating this on production content is difficult. The content is generally large, so polls take a long time. Each box is running many polls simultaneously, so the log entries for these polls are interleaved. Turning the logging level on polling up enough to show full details would affect all polls underway simultaneously, so the volume of log data would be overwhelming. Instead, we provided a live demonstration using a network of 5 LOCKSS daemons in the STF testing framework, preserving an AU of synthetic content. It consisted of two polls: the first detected no damage; in the second, damage to the content of one URL was created, detected and repaired. Annotated logs of the first poll are available from the poller and a voter. Annotated logs of the second poll are available from the poller and a voter.

Replicating the Demonstration

These demos have been included in the 1.66 release of the LOCKSS software. These instructions have been tested on a vanilla install of Ubuntu 14.04.1, up-to-date as of August 4. They should work on other recent Debian-based Linux systems.

The first step is to install the pre-requisites:

    foo@bar:~$ cd
    foo@bar:~$ sudo apt-get install default-jdk ant subversion libxml2-utils
    [sudo] password for foo:
    Reading package lists... 0%
    ...
    0 upgraded, 46 newly installed, 0 to remove and 0 not upgraded.
    Need to get 70.6 MB of archives.
    After this operation, 127 MB of additional disk space will be used.
    Do you want to continue? [Y/n] y
    ...
    done.
    foo@bar:~$ ls -l /etc/alternatives/javac
    lrwxrwxrwx 1 root root 42 Aug 4 18:45 /etc/alternatives/javac -> /usr/lib/jvm/java-7-openjdk-i386/bin/javac
    foo@bar:~$ export JAVA_HOME=/usr/lib/jvm/java-7-openjdk-i386
    foo@bar:~$

The next step is to check the latest release of the LOCKSS daemon out from SourceForge:

    foo@bar:~$ svn checkout svn://svn.code.sf.net/p/lockss/svn/lockss-daemon/tags/last_released_daemon lockss-daemon
    ...
    foo@bar:~$

The next step is to build the LOCKSS daemon. This takes a while; there's a lot of code to build. It generates Java warnings that you should be able to ignore, but no errors. Just to be sure that everything is OK, we run the unit and functional tests on the daemon that gets built. This takes much longer, especially on the little netbook I'm using to test the instructions:

    foo@bar:~$ mkdir ~/.ant
    foo@bar:~$ cd ~/.ant
    foo@bar:~$ ln -s ~/lockss-daemon/lib .
    foo@bar:~$ cd ~/lockss-daemon
    foo@bar:~/lockss-daemon$ ant
    Buildfile: /home/foo/lockss-daemon/build.xml
    ...
    BUILD SUCCESSFUL
    Total time: 81 minutes 21 seconds
    real 81m21.980s
    user 84m29.308s
    sys 5m13.708s
    foo@bar:~/lockss-daemon$

The next step is to configure STF for the demos. The demos work without this configuration, but they are much more informative with it:

    foo@bar:~$ cd ~/lockss-daemon/test/frameworks/run_stf
    foo@bar:~/lockss-daemon/test/frameworks/run_stf$ cp testsuite.opt.demo testsuite.opt
    foo@bar:~/lockss-daemon/test/frameworks/run_stf$

This configuration ensures that:

- The logs contain detailed information about the polling and repair process.

- The logs aren't deleted after the demo.

- The daemons stay running until you hit Enter. This allows you to use a Web browser to access the UI of the daemons and see the polling and voting status pages. See the STF README.txt file for details of how to do this.

Now you can go ahead and run the first demo in the STF test framework. It creates a network of 5 LOCKSS boxes each preserving an Archival Unit (AU) of synthetic content, and causes the first box to call a poll on it, which should result in complete agreement among the boxes:

    foo@bar:~/lockss-daemon/test/frameworks/run_stf$ python testsuite.py AuditDemo1
    11:27:35.057: INFO: ===================================
    11:27:35.057: INFO: Demo a V3 poll with no disagreement
    11:27:35.057: INFO: -----------------------------------
    11:27:35.250: INFO: Starting framework in /home/foo/gamma/lockss-daemon/test/frameworks/run_stf/testcase-1
    11:27:35.266: INFO: Waiting for framework to become ready
    11:27:45.624: INFO: Creating simulated AU's
    11:27:47.546: INFO: Waiting for simulated AU's to crawl
    11:27:47.759: INFO: AU's completed initial crawl
    11:27:47.760: INFO: No nodes damaged on client localhost:8041
    11:27:47.777: INFO: Waiting for a V3 poll to be called...
    11:28:18.087: INFO: Successfully called a V3 poll
    11:28:18.088: INFO: Checking V3 poll result...
    11:28:18.215: INFO: Asymmetric client localhost:8042 repairers OK
    11:28:18.249: INFO: Asymmetric client localhost:8043 repairers OK
    11:28:18.287: INFO: Asymmetric client localhost:8044 repairers OK
    11:28:18.322: INFO: Asymmetric client localhost:8045 repairers OK
    11:28:18.425: INFO: AU successfully polled
    11:28:19.427: INFO: No deadlocks detected
    >>> Delaying shutdown. Press Enter to continue...
    11:29:08.161: INFO: Stopping framework
    ----------------------------------------------------------------------
    Ran 1 test in 93.213s
    OK
    foo@bar:~/lockss-daemon/test/frameworks/run_stf$
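If you capture this console output, the time-stamped lines are easy to post-process. The following is a small sketch, not part of STF: the helper names `parse` and `seconds_between` are invented here, and the only assumption about the format is the `HH:MM:SS.mmm: LEVEL: message` shape visible in the transcript above.

```python
import re
from datetime import datetime

# Matches lines such as "11:27:47.777: INFO: Waiting for a V3 poll to be called..."
LINE = re.compile(r"^(\d{2}:\d{2}:\d{2}\.\d{3}): (\w+): (.*)$")


def parse(lines):
    """Yield (timestamp, level, message) for each time-stamped line; skip the rest."""
    for line in lines:
        m = LINE.match(line.strip())
        if m:
            yield datetime.strptime(m.group(1), "%H:%M:%S.%f"), m.group(2), m.group(3)


def seconds_between(lines, start_msg, end_msg):
    """Seconds from the first line starting with start_msg to the next end_msg line."""
    start = None
    for ts, _level, msg in parse(lines):
        if start is None:
            if msg.startswith(start_msg):
                start = ts
        elif msg.startswith(end_msg):
            return (ts - start).total_seconds()
    return None


# Two lines copied from the transcript above.
sample = [
    "11:27:47.777: INFO: Waiting for a V3 poll to be called...",
    "11:28:18.087: INFO: Successfully called a V3 poll",
]
wait = seconds_between(sample, "Waiting for a V3 poll", "Successfully called")
print(wait)
```

For the two sample lines this reports how long the framework waited for the poll to be called, about 30 seconds in the run shown.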

You will find that the demo has created a file system tree under testcase-1 with a directory for each of the five boxes in the network:

    foo@bar:~/lockss-daemon/test/frameworks/run_stf$ ls testcase-1
    daemon-8041 daemon-8042 daemon-8043 daemon-8044 daemon-8045 lockss.opt lockss.txt
    foo@bar:~/lockss-daemon/test/frameworks/run_stf$

daemon-8041 is the poller, the box that called the poll and tallied the result. You can see its log (an annotated version is here):

    foo@bar:~/lockss-daemon/test/frameworks/run_stf$ ls -l testcase-1/daemon-8041/test.out
    -rw-rw-r-- 1 foo foo 31399 Aug 5 13:56 testcase-1/daemon-8041/test.out
    foo@bar:~/lockss-daemon/test/frameworks/run_stf$

daemon-8042 through daemon-8045 are the voters, the boxes whose content is compared with the poller's. You can see their logs (an annotated version is here):

    foo@bar:~/lockss-daemon/test/frameworks/run_stf$ ls -l testcase-1/daemon-8042/test.out
    -rw-rw-r-- 1 foo foo 14755 Aug 5 13:56 testcase-1/daemon-8042/test.out
    foo@bar:~/lockss-daemon/test/frameworks/run_stf$
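To locate all five logs at once, a short script can walk the testcase directory. This is a convenience sketch rather than anything shipped with STF; the function name `daemon_logs` is made up for the example, and it relies only on the `daemon-*/test.out` layout shown above.

```python
from pathlib import Path


def daemon_logs(testcase_dir):
    """Return {daemon directory name: size in bytes of its test.out log}."""
    root = Path(testcase_dir)
    if not root.is_dir():
        return {}
    return {
        d.name: (d / "test.out").stat().st_size
        for d in sorted(root.glob("daemon-*"))
        if (d / "test.out").is_file()
    }
```

Calling `daemon_logs("testcase-1")` from the `run_stf` directory should return one entry per daemon, with `daemon-8041` being the poller and the others the voters.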

Now we clean up in preparation for the second demo:

    foo@bar:~/lockss-daemon/test/frameworks/run_stf$ ./clean.sh
    foo@bar:~/lockss-daemon/test/frameworks/run_stf$

In the second demo one of the daemons calls a poll, but before it does, one file in its simulated content is damaged. The other 4 vote, and they all disagree with the poller about the damaged file. The poller requests a repair of this file from one of the voters. Once the repair is received, the poller re-tallies the poll and now finds 100% agreement. The logs end up in the usual place; annotated versions are available for the poller and a voter.

    foo@bar:~/lockss-daemon/test/frameworks/run_stf$ python testsuite.py AuditDemo2
    11:16:24.793: INFO: ================================================
    11:16:24.793: INFO: Demo a basic V3 poll with repair via open access
    11:16:24.793: INFO: ------------------------------------------------
    11:16:24.987: INFO: Starting framework in /home/foo/gamma/lockss-daemon/test/frameworks/run_stf/testcase-1
    11:16:25.002: INFO: Waiting for framework to become ready
    11:16:35.392: INFO: Creating simulated AU's
    11:16:37.454: INFO: Waiting for simulated AU's to crawl
    11:16:37.671: INFO: AU's completed initial crawl
    11:16:38.320: INFO: Damaged the following node(s) on client localhost:8041:
        http://www.example.com/branch1/001file.txt
    11:16:38.337: INFO: Waiting for a V3 poll to be called...
    11:17:03.523: INFO: Successfully called a V3 poll
    11:17:03.523: INFO: Waiting for V3 repair...
    11:17:03.765: INFO: Asymmetric client localhost:8042 repairers OK
    11:17:03.802: INFO: Asymmetric client localhost:8043 repairers OK
    11:17:03.839: INFO: Asymmetric client localhost:8044 repairers OK
    11:17:03.869: INFO: Asymmetric client localhost:8045 repairers OK
    11:17:03.943: INFO: AU successfully repaired
    11:17:04.945: INFO: No deadlocks detected
    >>> Delaying shutdown. Press Enter to continue...
    11:17:15.661: INFO: Stopping framework
    ----------------------------------------------------------------------
    Ran 1 test in 50.956s
    OK
    foo@bar:~/lockss-daemon/test/frameworks/run_stf$

Now we clean up in preparation for the third demo:

    foo@bar:~/lockss-daemon/test/frameworks/run_stf$ ./clean.sh
    foo@bar:~/lockss-daemon/test/frameworks/run_stf$

In the second demo, the simulated content was open access, so there was no restriction on the voter sending a repair to the poller. The common case is that the content is not open access, in which case the voter has to remember agreeing with the poller in the past about the AU being repaired so that it doesn't leak content to boxes that could not get it directly from the publisher.
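The rule a voter applies before sending a repair can be summarised in a few lines. This is a simplified sketch of the policy described above, not the daemon's code: the `Voter` class, its method names, and the identifiers used for peers and AUs are all hypothetical.

```python
class Voter:
    """Tracks past agreements so repairs go only to peers entitled to the content."""

    def __init__(self):
        # (peer_id, au_id) pairs for which an earlier poll ended in agreement.
        self.agreed = set()

    def record_agreement(self, peer_id, au_id):
        """Remember that this peer has agreed with us about this AU."""
        self.agreed.add((peer_id, au_id))

    def may_send_repair(self, peer_id, au_id, open_access):
        """Open access content may always be sent; otherwise prior agreement is required."""
        return open_access or (peer_id, au_id) in self.agreed


# Hypothetical identifiers, for illustration only.
voter = Voter()
print(voter.may_send_repair("poller-8041", "au-1", open_access=True))   # True
print(voter.may_send_repair("poller-8041", "au-1", open_access=False))  # False
voter.record_agreement("poller-8041", "au-1")
print(voter.may_send_repair("poller-8041", "au-1", open_access=False))  # True
```

The second and third demos exercise the two branches of this check: open access content in the second, and remembered prior agreement in the third.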

In the third demo the daemons achieve agreement on the non-open access content before damage is created at the poller. Then when the poller next calls a poll, detects the damage and requests a repair, the voter remembers the prior agreement and sends a repair.

    foo@bar:~/lockss-daemon/test/frameworks/run_stf$ python testsuite.py AuditDemo3
    11:18:05.527: INFO: =======================================================
    11:18:05.527: INFO: Demo a basic V3 poll with repair via previous agreement
    11:18:05.527: INFO: -------------------------------------------------------
    11:18:05.722: INFO: Starting framework in /home/foo/gamma/lockss-daemon/test/frameworks/run_stf/testcase-1
    11:18:05.743: INFO: Waiting for framework to become ready
    11:18:21.199: INFO: Creating simulated AU's
    11:18:23.138: INFO: Waiting for simulated AU's to crawl
    11:18:23.355: INFO: AU's completed initial crawl
    11:18:23.449: INFO: Waiting for a V3 poll by all simulated caches
    11:18:48.653: INFO: Client on port 8041 called V3 poll...
    11:18:48.694: INFO: Client on port 8042 called V3 poll...
    11:18:48.732: INFO: Client on port 8043 called V3 poll...
    11:18:48.764: INFO: Client on port 8044 called V3 poll...
    11:18:48.814: INFO: Client on port 8045 called V3 poll...
    11:18:48.814: INFO: Waiting for all peers to win their polls
    11:18:48.891: INFO: Client on port 8041 won V3 poll...
    11:18:48.972: INFO: Client on port 8042 won V3 poll...
    11:18:49.072: INFO: Client on port 8043 won V3 poll...
    11:18:49.157: INFO: Client on port 8044 won V3 poll...
    11:18:49.248: INFO: Client on port 8045 won V3 poll...
    11:18:50.347: INFO: Damaged the following node(s) on client localhost:8041:
        http://www.example.com/001file.bin
        http://www.example.com/001file.txt
        http://www.example.com/002file.bin
        http://www.example.com/002file.txt
        http://www.example.com/branch1/001file.bin
        http://www.example.com/branch1/001file.txt
        http://www.example.com/branch1/002file.bin
        http://www.example.com/branch1/002file.txt
        http://www.example.com/branch1/index.html
        http://www.example.com/index.html
    11:18:50.375: INFO: Waiting for a V3 poll to be called...
    11:19:25.638: INFO: Successfully called a V3 poll
    11:19:25.714: INFO: Waiting for a V3 poll to be called...
    11:19:25.742: INFO: Successfully called a V3 poll
    11:19:25.742: INFO: Waiting for V3 repair...
    11:19:26.871: INFO: Asymmetric client localhost:8042 repairers OK
    11:19:26.871: INFO: Asymmetric client localhost:8042 repairers OK
    11:19:26.872: INFO: Asymmetric client localhost:8042 repairers OK
    11:19:26.872: INFO: Asymmetric client localhost:8042 repairers OK
    11:19:26.919: INFO: Asymmetric client localhost:8043 repairers OK
    11:19:26.919: INFO: Asymmetric client localhost:8043 repairers OK
    11:19:26.919: INFO: Asymmetric client localhost:8043 repairers OK
    11:19:26.920: INFO: Asymmetric client localhost:8043 repairers OK
    11:19:26.955: INFO: Asymmetric client localhost:8044 repairers OK
    11:19:26.955: INFO: Asymmetric client localhost:8044 repairers OK
    11:19:26.955: INFO: Asymmetric client localhost:8044 repairers OK
    11:19:26.956: INFO: Asymmetric client localhost:8044 repairers OK
    11:19:26.999: INFO: Asymmetric client localhost:8045 repairers OK
    11:19:26.999: INFO: Asymmetric client localhost:8045 repairers OK
    11:19:26.999: INFO: Asymmetric client localhost:8045 repairers OK
    11:19:26.999: INFO: Asymmetric client localhost:8045 repairers OK
    11:19:27.083: INFO: AU successfully repaired
    11:19:28.086: INFO: No deadlocks detected
    >>> Delaying shutdown. Press Enter to continue...
    11:20:46.489: INFO: Stopping framework
    ----------------------------------------------------------------------
    Ran 1 test in 161.058s
    OK
    foo@bar:~/lockss-daemon/test/frameworks/run_stf$

Finally we clean up again:

    foo@bar:~/lockss-daemon/test/frameworks/run_stf$ ./clean.sh ; rm testsuite.opt
    foo@bar:~/lockss-daemon/test/frameworks/run_stf$

Change Process

Changes to this document require:

- Review by LOCKSS Engineering Staff

- Approval by LOCKSS Technical Manager

Relevant Documents

- CLOCKSS: Box Operations

- Petros Maniatis, Mema Roussopoulos, TJ Giuli, David S.H. Rosenthal, Mary Baker, and Yanto Muliadi. “LOCKSS: A Peer-to-Peer Digital Preservation System”, ACM Transactions on Computer Systems vol. 23, no. 1, February 2005, pp. 2-50. http://dx.doi.org/10.1145/1047915.1047917 accessed 2013.8.7